👨🏻‍💻 About Me

I am currently a second-year master's student (preparing for PhD applications) at the School of Computer Science, Shanghai Jiao Tong University (上海交通大学计算机学院). I have been a member of ReThinkLab since 2022, supervised by Prof. Junchi Yan (严骏驰), who leads the lab. I obtained my Bachelor of Engineering degree from the Department of Computer Science and Engineering of SJTU (IEEE Honors Class; provincial top 80 in the College Entrance Examination) in 2024. My recent research interests include learning to solve complex discrete optimization problems (esp. neural combinatorial optimization), generative models, machine learning on graphs, and broader areas toward large decision-making models and scientific intelligence.

📖 Education

- 2024.09 - now, School of Computer Science, SJTU (pursuing a Master’s degree while preparing for PhD applications)

- 2020.09 - 2024.06, Department of Computer Science and Engineering (IEEE Honors Class), SJTU (B.E. Degree obtained)

🎖 Honors and Awards

- 2025.11 First-class Academic Scholarship of SJTU (研究生学业一等奖学金 top 10% in Dept.)

- 2025.10 National Scholarship for Graduate Students (研究生国家奖学金 top 2% nationwide)

- 2025.09 Merit Student of Shanghai Jiao Tong University (上海交通大学三好学生 top 8% in SJTU)

- 2024.06 Outstanding Graduate of Shanghai Jiao Tong University (上海交通大学优秀毕业生 top 10% in SJTU)

- 2021-2023 (Annual) Academic Excellence Scholarship (上海交通大学优秀奖学金 top 10% in Dept.)

- 2022.11 Huatai Securities Technology Scholarship (华泰证券科技奖学金 40 recipients at SJTU)

- 2021.11 SMC-Takada Scholarship (SMC高田奖学金 top 5% in Dept.)

- 2021.09 Merit Student of Shanghai Jiao Tong University (上海交通大学三好学生 top 8% in SJTU)

- 2021.05 Merit League Member of Shanghai Jiao Tong University (上海交通大学优秀团员 top 8% in SJTU)

- ……

📝 Publications

(CCF-A) ML4CO-Bench-101: Benchmark Machine Learning for Classic Combinatorial Problems on Graphs [PDF][Code]

Jiale Ma, Wenzheng Pan, Yang Li, Junchi Yan

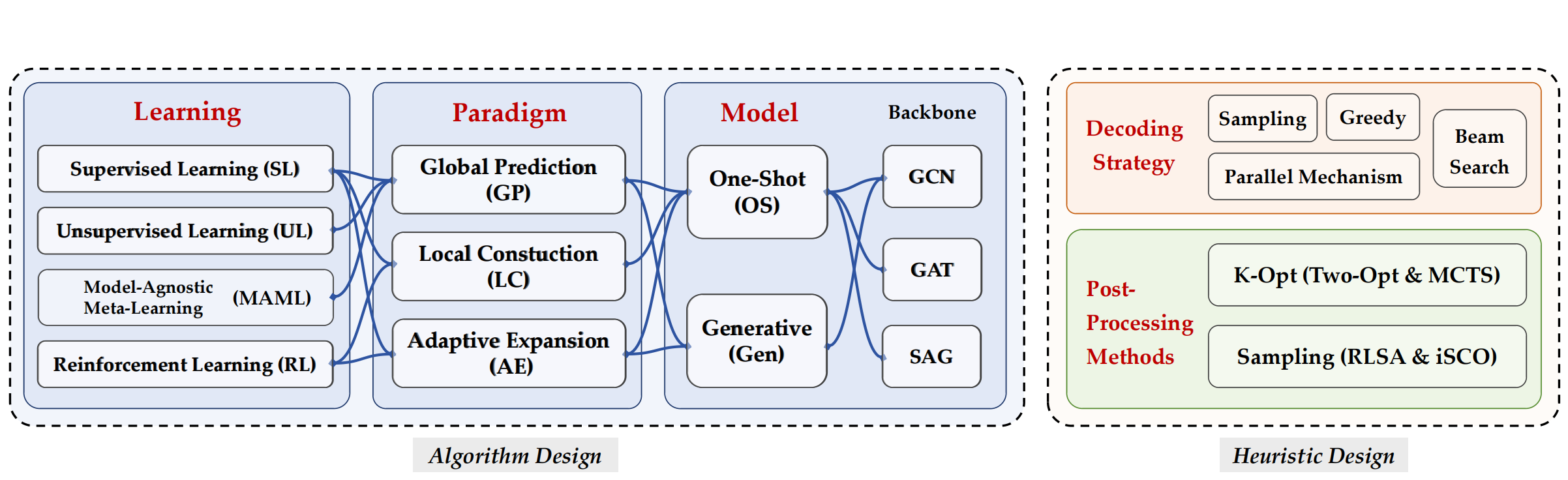

In this paper, we establish a modular and streamlined framework for benchmarking prevalent neural CO methods, dissecting their design choices via a three-level “paradigm-model-learning” taxonomy to better characterize different approaches. Further, we integrate their shared features and respective strengths into three unified solvers representing the global prediction (GP), local construction (LC), and adaptive expansion (AE) paradigms. We also collate a total of 34 datasets across scales for 7 mainstream CO problems (edge-oriented tasks: TSP, ATSP, CVRP; node-oriented tasks: MIS, MCl, MVC, MCut), making results more comparable across the literature.

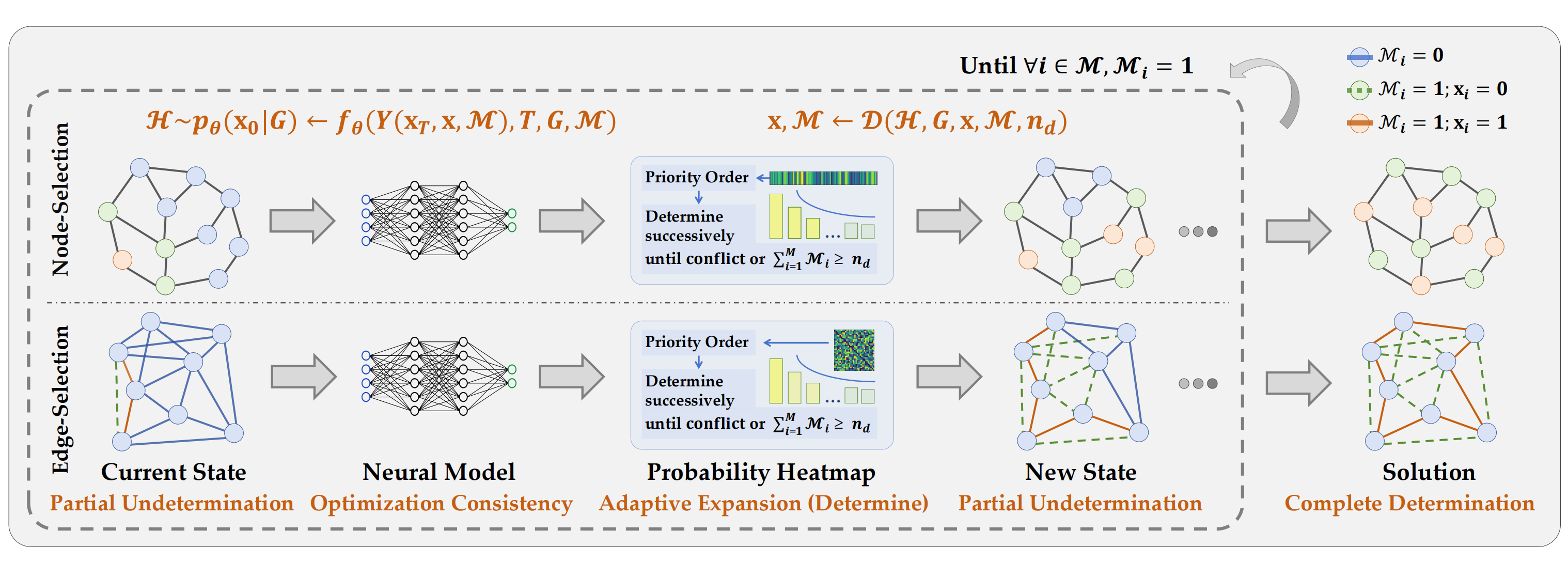
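To make the GP paradigm a little more concrete: a GP-style solver predicts a heatmap (for node-oriented tasks, one score per node) in a single shot, and that heatmap must then be decoded into a feasible solution. The sketch below shows one generic greedy decoding rule for MIS. It is an illustrative assumption, not the benchmark's own code; `greedy_mis_decode` is a hypothetical helper and the scores stand in for a model's output.

```python
from typing import Dict, List, Set


def greedy_mis_decode(scores: List[float], adj: Dict[int, Set[int]]) -> List[int]:
    """Greedily decode a node heatmap into an independent set.

    Nodes are visited in descending score order; a node is kept unless it
    is already blocked (selected itself, or adjacent to a selected node).
    """
    order = sorted(range(len(scores)), key=lambda v: scores[v], reverse=True)
    selected: List[int] = []
    blocked: Set[int] = set()
    for v in order:
        if v in blocked:
            continue
        selected.append(v)
        blocked.add(v)
        blocked.update(adj.get(v, set()))  # neighbours can no longer be chosen
    return sorted(selected)


# Path graph 0-1-2-3 with hypothetical model scores.
adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
print(greedy_mis_decode([0.9, 0.2, 0.8, 0.3], adj))  # [0, 2]
```

Because every kept node blocks its neighbours, the output is always independent; the heatmap only decides which feasible set the greedy pass lands on.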

(CCF-A) COExpander: Adaptive Solution Expansion for Combinatorial Optimization [PDF][Code]

Jiale Ma*, Wenzheng Pan*, Yang Li, Junchi Yan

- We propose a novel paradigm, Adaptive Expansion (AE), and the COExpander solver for neural combinatorial optimization (NCO). It bridges the global prediction (GP) and local construction (LC) paradigms via a partial-state-prompted heatmap generator that makes decisions with adaptive step sizes.

- We re-wrap 5 non-learning baseline solvers and re-canonicalize 29 standard datasets, providing a standard benchmark for 6 commonly studied COPs.

- Compared with the previous neural SOTA, COExpander reduces the average optimality drop on the 6 COPs from 3.81% to 0.66% while achieving a 4.0x speedup.
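For readers unfamiliar with the metric, a minimal sketch of how an optimality drop is commonly computed, assuming the usual definition relative to a reference (optimal or best-known) objective with instances weighted equally; the paper's exact reference solvers and averaging may differ. Function names and numbers are hypothetical.

```python
from statistics import mean
from typing import Sequence


def optimality_drop(obj: float, ref: float, minimize: bool = True) -> float:
    """Relative drop (in %) of a solution's objective w.r.t. a reference objective.

    Minimization (e.g. TSP tour length): (obj - ref) / ref.
    Maximization (e.g. MIS set size):    (ref - obj) / ref.
    """
    if ref == 0:
        raise ValueError("reference objective must be non-zero")
    diff = obj - ref if minimize else ref - obj
    return 100.0 * diff / abs(ref)


def average_drop(objs: Sequence[float], refs: Sequence[float], minimize: bool = True) -> float:
    """Mean per-instance drop over a dataset."""
    if len(objs) != len(refs) or not objs:
        raise ValueError("need equally long, non-empty sequences")
    return mean(optimality_drop(o, r, minimize) for o, r in zip(objs, refs))


# Hypothetical numbers, for illustration only.
print(optimality_drop(10.5, 10.0))              # TSP tour 5% longer than the reference
print(optimality_drop(19, 20, minimize=False))  # MIS set 5% smaller than the reference
```

The sign convention is flipped for maximization so that a larger drop always means a worse solution, which is what makes averaging across edge- and node-oriented problems meaningful.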

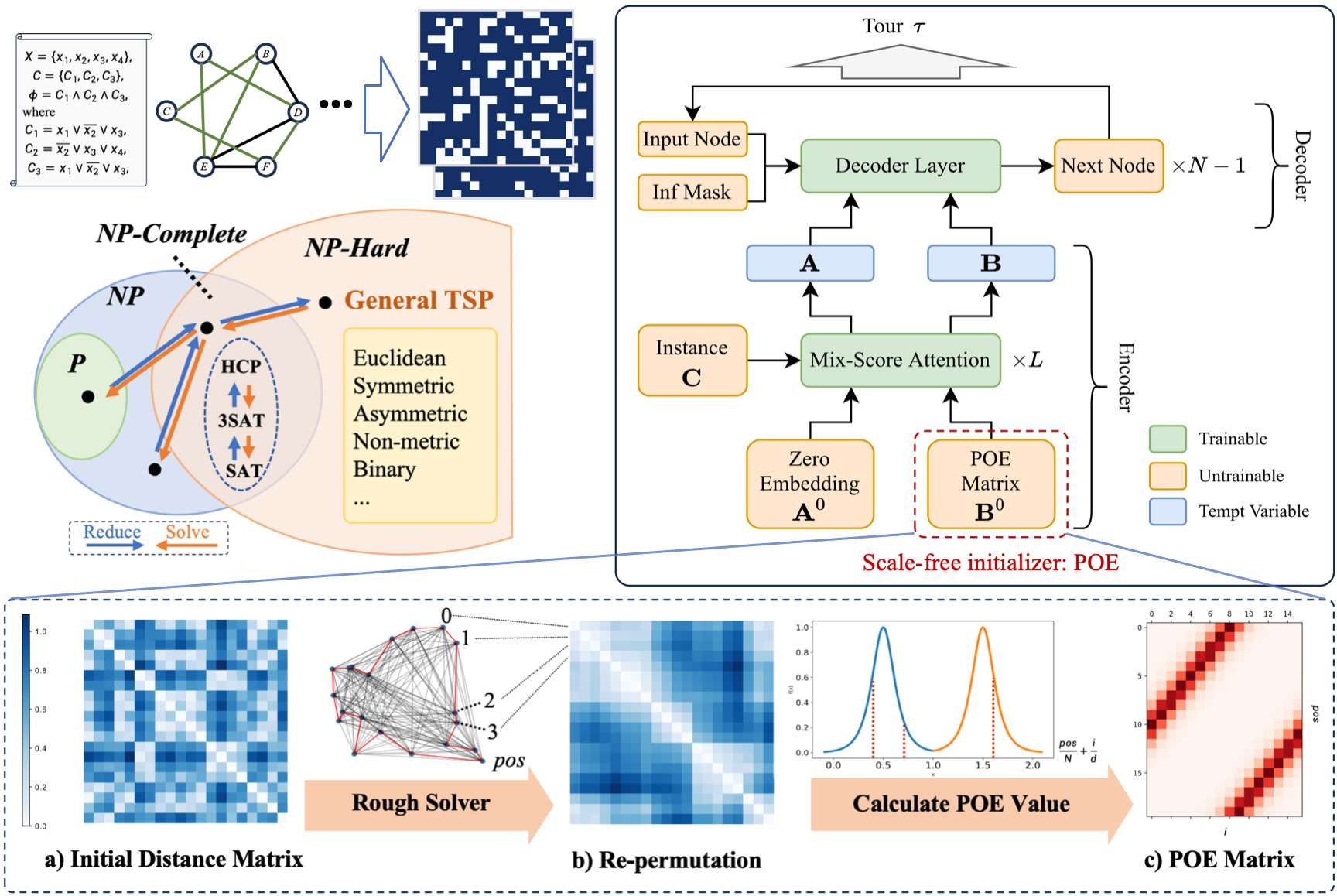

(CAAI/Tsinghua-A) UniCO: On Unified Combinatorial Optimization via Problem Reduction to Matrix-Encoded General TSP [PDF][Code]

Wenzheng Pan*, Hao Xiong*, Jiale Ma, Wentao Zhao, Yang Li, Junchi Yan

- We propose the UniCO framework to unify a set of CO problems by reducing them into the general TSP form for effective and simultaneous training.

- This work focuses on challenging TSPs with non-metric, asymmetric, or discrete distances and no explicit node coordinates.

- Two neural TSP solvers are devised to handle such matrix inputs, trained without and with supervision respectively: 1) MatPOENet, an RL-based sequential model with a pseudo one-hot embedding (POE) scheme, and 2) MatDIFFNet, a diffusion-based generative model with a mix-noised reference mapping scheme.

- Pioneering experiments are conducted on ATSP, 2DTSP, and general matrix-encoded TSPs reduced from HCP and SAT distributions.
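As a hedged illustration of what "reducing a problem to a matrix-encoded TSP" can look like, the sketch below uses the textbook HCP-to-TSP encoding: graph edges cost 1, non-edges cost 2, so the graph has a Hamiltonian cycle exactly when the optimal tour length equals the number of nodes. This encoding is an assumption for illustration; UniCO's actual encodings for HCP and SAT may differ. `brute_force_tour_length` is a hypothetical stand-in for a neural solver and only works on tiny instances.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def hcp_to_tsp_matrix(n: int, edges: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Encode an undirected HCP instance as a TSP distance matrix.

    Edges of the graph cost 1, non-edges cost 2 (diagonal is 0), so the
    graph is Hamiltonian iff the optimal tour has length exactly n.
    """
    dist = [[0 if i == j else 2 for j in range(n)] for i in range(n)]
    for u, v in edges:
        dist[u][v] = dist[v][u] = 1
    return dist


def brute_force_tour_length(dist: List[List[int]]) -> int:
    """Exact TSP by enumeration (tiny n only); node 0 is fixed as the start."""
    n = len(dist)
    best = None
    for perm in permutations(range(1, n)):
        tour = (0,) + perm
        length = sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))
        if best is None or length < best:
            best = length
    return best


cycle = hcp_to_tsp_matrix(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
star = hcp_to_tsp_matrix(4, [(0, 1), (0, 2), (0, 3)])
print(brute_force_tour_length(cycle) == 4)  # True: the 4-cycle is Hamiltonian
print(brute_force_tour_length(star) == 4)   # False: a star graph is not
```

The point of such an encoding is that the solver only ever sees a distance matrix, with no node coordinates, which is exactly the non-metric, coordinate-free setting described above.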

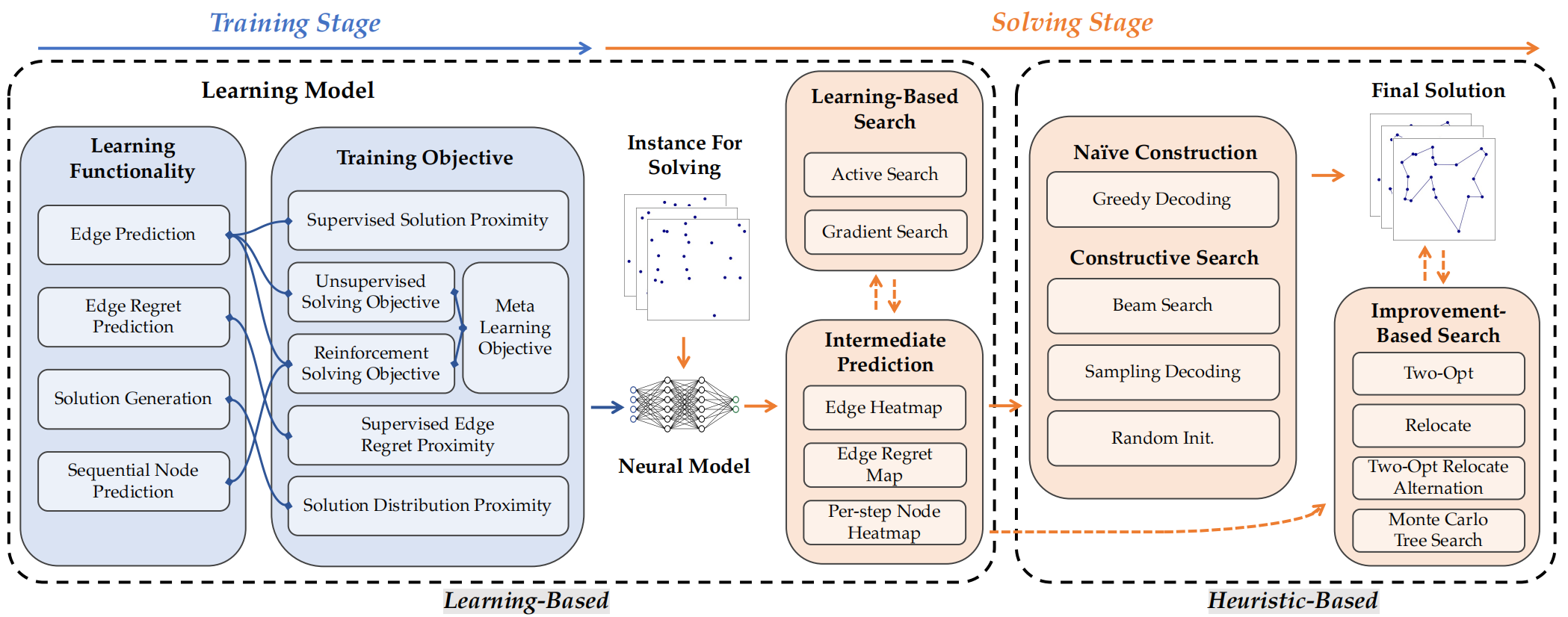

(CAAI/Tsinghua-A) Unify ML4TSP: Drawing Methodological Principles for TSP and Beyond from Streamlined Design Space of Learning and Search [PDF][Code]

Yang Li, Jiale Ma, Wenzheng Pan, Runzhong Wang, Haoyu Geng, Nianzu Yang, Junchi Yan

- ML4TSPBench provides a unified, modular pipeline that incorporates existing techniques in both learning and search, enabling transparent ablation.

- The derived principles for ML4TSP solver design are joint probability estimation, symmetry solution representation, and online optimization.

- Strategically decoupling existing components and organically recombining them yields a range of new and stronger TSP solvers.
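Search is one half of the learning-plus-search design space described above. As a generic sketch (not ML4TSPBench's implementation), here is a first-improvement 2-opt local search on 2D points, the kind of routine that can post-process a tour decoded from a learned heatmap; the helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def tour_length(pts: Sequence[Point], tour: Sequence[int]) -> float:
    """Total Euclidean length of a closed tour."""
    n = len(tour)
    return sum(math.dist(pts[tour[i]], pts[tour[(i + 1) % n]]) for i in range(n))


def two_opt(pts: Sequence[Point], tour: Sequence[int]) -> List[int]:
    """First-improvement 2-opt: reverse a segment whenever that shortens the tour."""
    tour = list(tour)
    n = len(tour)
    improved = True
    while improved:
        improved = False
        for i in range(n - 1):
            for j in range(i + 2, n):
                # Candidate swap: edges (a, b) and (c, d) become (a, c) and (b, d).
                a, b = tour[i], tour[i + 1]
                c, d = tour[j], tour[(j + 1) % n]
                if a == d:  # the two edges are adjacent; nothing to swap
                    continue
                delta = (math.dist(pts[a], pts[c]) + math.dist(pts[b], pts[d])
                         - math.dist(pts[a], pts[b]) - math.dist(pts[c], pts[d]))
                if delta < -1e-12:
                    tour[i + 1:j + 1] = reversed(tour[i + 1:j + 1])
                    improved = True
    return tour


# Unit square visited in a self-crossing order.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
better = two_opt(square, [0, 2, 1, 3])
print(better, tour_length(square, better))
```

Each accepted move strictly shortens the tour, so the loop terminates at a 2-opt local optimum; on the square example it removes the crossing and returns a perimeter tour.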

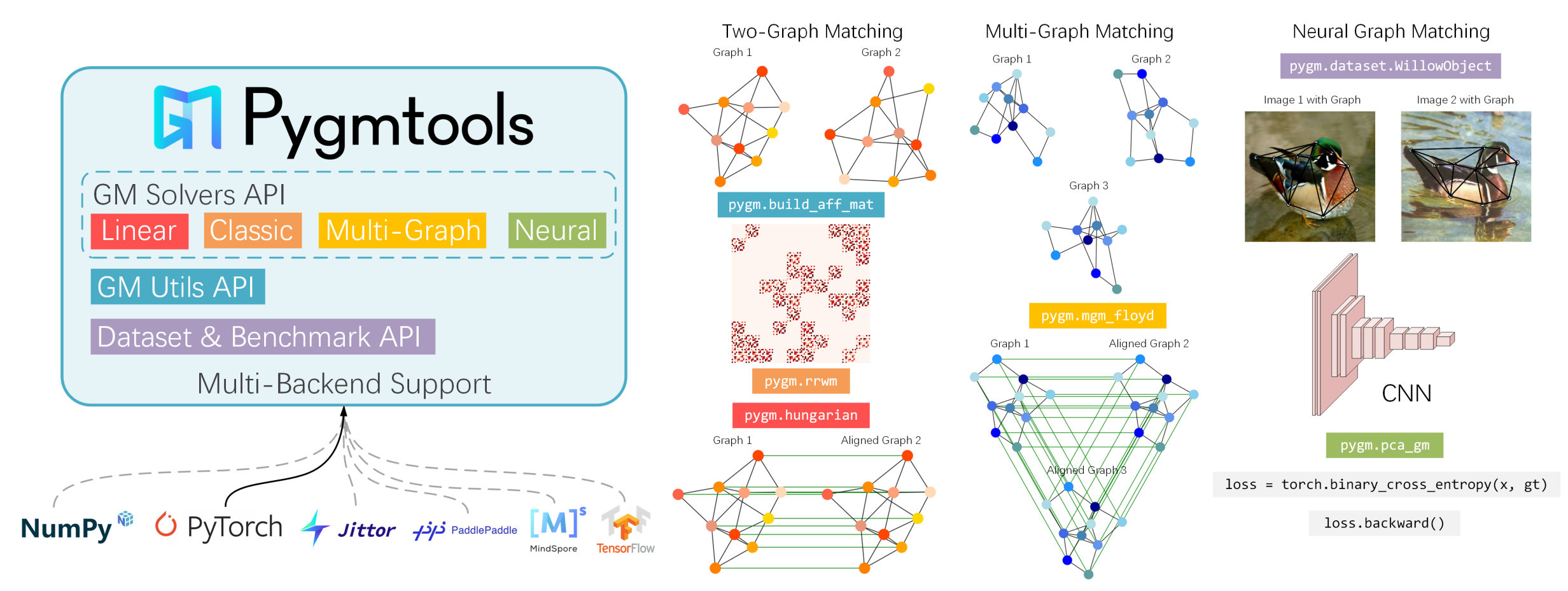

(CCF-A) Pygmtools: A Python Graph Matching Toolkit [PDF][Code]

Runzhong Wang, Ziao Guo, Wenzheng Pan, Jiale Ma, Yikai Zhang, Nan Yang, Qi Liu, Longxuan Wei, Hanxue Zhang, Chang Liu, Zetian Jiang, Xiaokang Yang, Junchi Yan

- Pygmtools is released as a Python graph matching toolkit that implements a comprehensive collection of two-graph and multi-graph matching solvers.

- Our implementation supports multiple numerical backends (NumPy, PyTorch, Jittor, and Paddle), runs on Windows, macOS, and Linux, and comes with friendly guidance.

⚙️ Open Source Projects

- Awesome-ML4CO, a curated collection of literature in the ML4CO field, organized to help researchers access both foundational and recent developments. The repository is maintained jointly by members of SJTU-Thinklab and contributors from the community.

- ML4CO-Kit, a general-purpose toolkit that provides implementations of common algorithms used in ML4CO, along with basic training frameworks, traditional solvers, and data generation tools. It aims to simplify the implementation of key techniques and offer a solid base for developing machine learning models for COPs.

- ML4TSPBench, a benchmark that focuses on the TSP as a representative CO problem. It offers a deep dive into various methodological designs, enabling comparisons and the development of specialized algorithms.

- ML4CO-Bench-101, a benchmark that categorizes neural combinatorial optimization (NCO) solvers by solving paradigm, model design, and learning strategy. It evaluates the applicability and generalization of different NCO approaches across a broad range of combinatorial optimization problems to uncover universal insights that transfer across various domains of ML4CO.

- Pygmtools, a Python graph matching toolkit that implements a comprehensive collection of two-graph and multi-graph matching solvers, covering both learning-free solvers and learning-based neural graph matching solvers. Our implementation supports multiple numerical backends (NumPy, PyTorch, Jittor, and Paddle), runs on Windows, macOS, and Linux, and is easy to install and configure.

🔥 News

- 2025.10: 🏅 I was awarded the National Scholarship for Graduate Students!

- 2025.10: 🔍 I served as a reviewer for ICLR 2026 and AAMAS 2026!

- 2025.09: 🎉 One paper was accepted by NeurIPS 2025!

- 2025.05: 🎉 One paper was accepted by ICML 2025!

- 2025.01: 🎉 Two papers were accepted by ICLR 2025!

- 2024.10: 🔍 I served as a reviewer for ICLR 2025!

- 2024.06: 🏅 I was named an Outstanding Graduate of SJTU!

- 2024.01: 🎉 One paper was accepted by JMLR!
